The 128k Token Illusion

Claude has a 200k context window. GPT-4 Turbo offers 128k. Gemini boasts even larger capacities. But here's the uncomfortable truth: you're probably only getting value from 10-20% of that capacity.

This isn't a quirk of one particular model. It's a recurring pattern in how current LLMs attend to long inputs, and understanding it will change how you build AI applications.

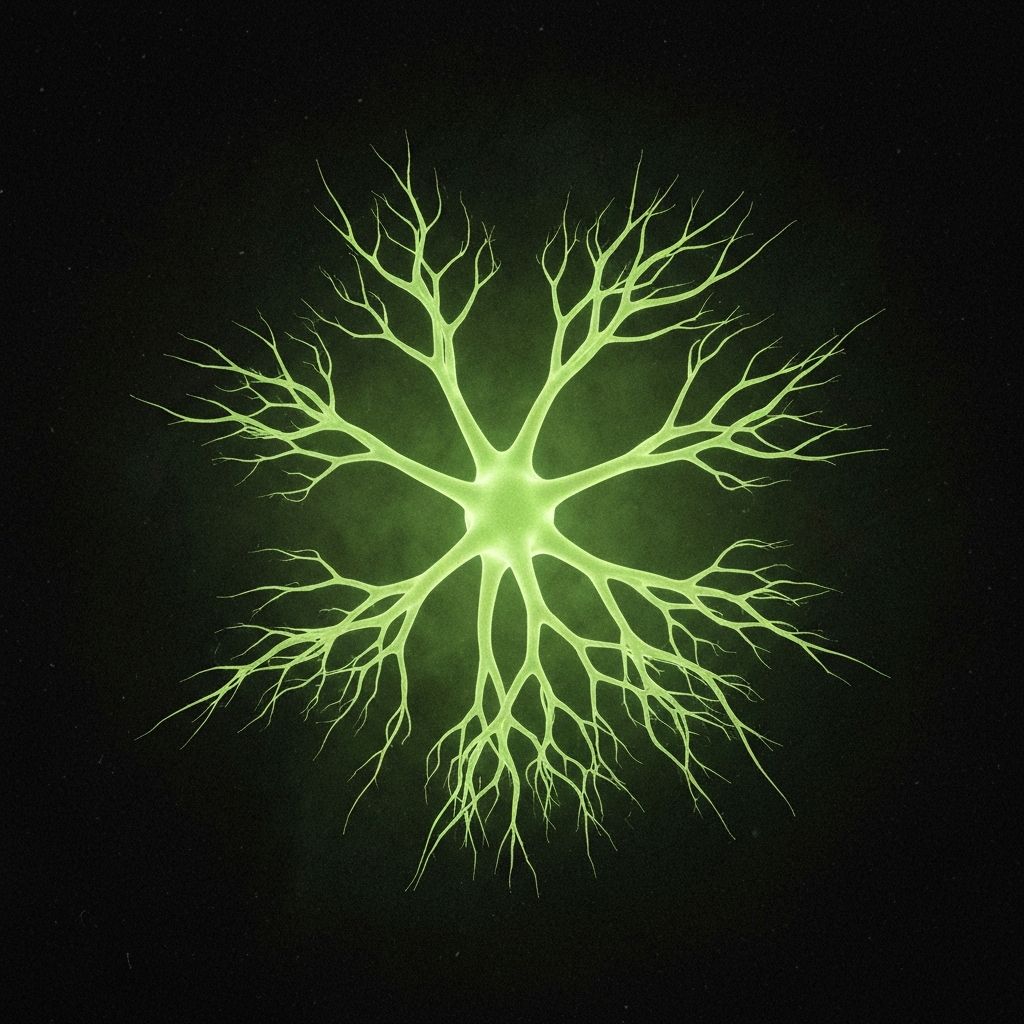

The Attention Decay Problem

Attention isn't uniform across the context window. Studies of long-context retrieval (the "lost in the middle" effect) show that LLMs tend to exhibit primacy and recency biases:

- The first ~2000 tokens get disproportionate attention

- The last ~2000 tokens are well-attended

- Everything in the middle? It's a graveyard.

Attention Distribution:

[████████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░████████]
 ^high                                    high^
         ^^^^^ the "lost middle" ^^^^^

Practical Implications

If you're stuffing your context window with 100k tokens of retrieved documents, you're likely wasting compute: much of that information won't meaningfully influence the output.

The Effective Context Budget

For most production use cases, I recommend thinking in terms of an effective context budget:

| Context Region | Token Budget | Purpose |
|---|---|---|
| System prompt | 500-2000 | Persona, constraints, format |
| Core context | 2000-4000 | Essential reference material |
| Dynamic context | 1000-2000 | Session state, recent history |
| Query context | 500-1000 | Retrieved chunks, immediate data |
| Buffer | 500-1000 | Response generation space |

That's roughly 4.5-10k tokens of high-impact context, even when your model supports 100k+.
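To make the budget concrete, here is a minimal sketch that encodes the table as data, sums the ranges, and flags any region that exceeds its upper bound. The names `CONTEXT_BUDGET`, `totalBudget`, and `overBudgetRegions` are illustrative, not part of any library, and the usage numbers in the example are made up.

```javascript
// Budget ranges copied from the table above; the region keys are illustrative names.
const CONTEXT_BUDGET = {
  systemPrompt:   { min: 500,  max: 2000 },
  coreContext:    { min: 2000, max: 4000 },
  dynamicContext: { min: 1000, max: 2000 },
  queryContext:   { min: 500,  max: 1000 },
  buffer:         { min: 500,  max: 1000 },
};

// Sum the lower and upper bounds across all regions.
function totalBudget(budget) {
  return Object.values(budget).reduce(
    (acc, { min, max }) => ({ min: acc.min + min, max: acc.max + max }),
    { min: 0, max: 0 }
  );
}

// Return the names of regions whose measured token count exceeds the upper bound.
function overBudgetRegions(usage, budget = CONTEXT_BUDGET) {
  return Object.entries(usage)
    .filter(([region, tokens]) => budget[region] && tokens > budget[region].max)
    .map(([region]) => region);
}

console.log(totalBudget(CONTEXT_BUDGET)); // { min: 4500, max: 10000 }
console.log(overBudgetRegions({ systemPrompt: 1200, queryContext: 3500 })); // [ 'queryContext' ]
```

A check like this could run just before each request, so an oversized retrieval step shows up as a warning rather than as a silent quality drop.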

Strategies for Context Optimization

1. Aggressive Summarization

Don't pass raw documents. Summarize them contextually:

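// vectorDB and llm are placeholders for your own retrieval and model clients.

// Instead of passing 20 raw chunks straight into the prompt
const chunks = await vectorDB.search(query, { k: 20 });

// Retrieve fewer chunks and condense them around the query
const relevantChunks = await vectorDB.search(query, { k: 5 });
const summarized = await llm.summarize(relevantChunks, {
  focus: query,
  maxTokens: 500
});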
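Summarization costs an extra model call, so a cheaper complementary step is to cap what you retrieve before summarizing. The sketch below is one possible approach, not the method above: it greedily keeps the highest-scoring chunks that fit a token budget. The chunk shape `{ text, score }` and the helper names are assumptions, and the four-characters-per-token estimate is only a rough rule of thumb for English text.

```javascript
// Rough heuristic (assumption): about 4 characters per token for English text.
// Use your model's real tokenizer when accuracy matters.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Keep the highest-scoring chunks that fit within maxTokens.
// Each chunk is assumed to look like { text: string, score: number }.
function selectWithinBudget(chunks, maxTokens) {
  const ranked = [...chunks].sort((a, b) => b.score - a.score);
  const selected = [];
  let used = 0;
  for (const chunk of ranked) {
    const cost = estimateTokens(chunk.text);
    if (used + cost > maxTokens) continue; // skip chunks that would overflow the budget
    selected.push(chunk);
    used += cost;
  }
  return { selected, used };
}
```

Skipping an oversized chunk rather than stopping at it lets smaller, lower-ranked chunks still fill the remaining budget.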

2. Strategic Positioning

Place critical information at the beginning and end of your context. The middle should hold "nice to have" information whose loss won't cause a catastrophic failure.
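One way to apply this to retrieved chunks is to alternate them between the front and the back of the prompt by relevance, so the weakest material lands in the middle. This is a sketch under the assumption that each chunk carries a numeric `score`; `arrangeForEdges` is a hypothetical helper name.

```javascript
// Order chunks so the most relevant sit at the edges and the least relevant in the middle.
// Each chunk is assumed to look like { text: string, score: number }.
function arrangeForEdges(chunks) {
  const ranked = [...chunks].sort((a, b) => b.score - a.score);
  const front = [];
  const back = [];
  ranked.forEach((chunk, i) => {
    // Alternate: rank 1 goes to the front, rank 2 to the back, rank 3 to the front, ...
    if (i % 2 === 0) front.push(chunk);
    else back.unshift(chunk);
  });
  return [...front, ...back];
}

const ordered = arrangeForEdges([
  { text: "A", score: 0.9 },
  { text: "B", score: 0.7 },
  { text: "C", score: 0.5 },
  { text: "D", score: 0.3 },
  { text: "E", score: 0.1 },
]);
console.log(ordered.map((c) => c.text).join(" ")); // A C E D B
```

Here the top two chunks (A and B) end up first and last, while the lowest-scoring chunk (E) sits in the center.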

3. Chunking for Attention

Smaller, discrete chunks with clear boundaries get better attention than walls of text. Use formatting to create attention anchors:

## Key Fact 1

[Content]

## Key Fact 2

[Content]
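If your chunks carry a title, producing that layout can be automated. The following is a minimal sketch: `formatAsSections` is a hypothetical helper, the `{ title, text }` chunk shape is an assumption, and the sample facts are invented for illustration.

```javascript
// Render each chunk as its own headed section so boundaries are explicit.
// Each chunk is assumed to look like { title: string, text: string }.
function formatAsSections(chunks) {
  return chunks
    .map(({ title, text }) => `## ${title}\n\n${text.trim()}`)
    .join("\n\n");
}

// Invented sample content, for illustration only.
const context = formatAsSections([
  { title: "Key Fact 1", text: "Refunds are accepted within 30 days. " },
  { title: "Key Fact 2", text: "Shipping takes 3-5 business days." },
]);
console.log(context);
```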

The Mental Model Shift

Stop thinking of context windows as "how much I can fit" and start thinking of them as "how much the model can effectively use."

This shift has profound implications for:

- Cost optimization — Fewer tokens, lower bills

- Latency reduction — Less to process, faster responses

- Quality improvement — Better attention on what matters

Next up: Building Memory Systems for Agentic AI. How do you maintain context across multi-turn, multi-agent workflows?